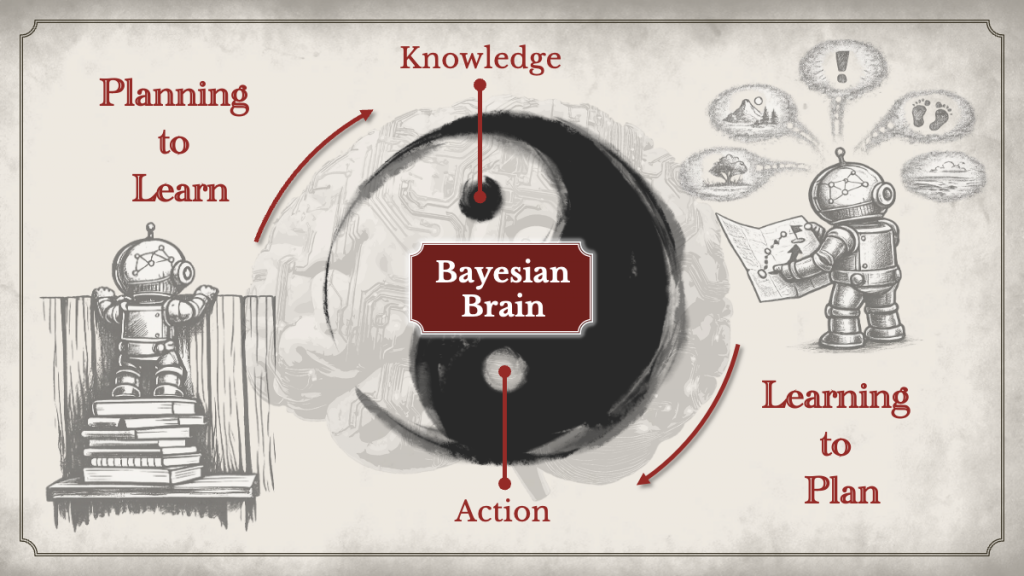

Knowledge–Action Unity with a Bayesian Brain

From fleets of rovers autonomously mapping Martian lava tubes to robotic teams navigating disaster zones and underwater vehicles exploring our oceans, the next frontier for robotics lies in the wild—in uncertain, unstructured, and unstable environments where human intervention is impossible. While their missions differ, these systems all share a universal, existential constraint: a finite energy budget. Once launched, they may remain in the wild for months or even years, and every action (e.g., sensing, moving, and even computing) is a withdrawal from a limited account. This brings a profound shift in challenges: data is sparse and expensive, environments are noisy and evolving, and above all, energy becomes a finite currency of survival. Current mainstream approaches, which often rely on extensive data, static models, and abundant computational power, struggle under these constraints. This led me to a fundamental inquiry:

How can we build robots that grow in the wild—capable of self-evolving with long-term autonomy in uncertain, unstructured, and unstable real-world scenarios?

I believe the answer lies in reverse-engineering the same principles behind human reasoning—Bayesian principles. We are natural Bayesians: we integrate new evidence with prior knowledge and choose actions that serve our goals in the face of uncertainty. Unlike traditional methods that separate learning and planning as disconnected modules, my work unifies them into a continual, four-step process under a universal energy constraint: 1) Inference; 2) Inference for Decision; 3) Decision; and 4) Decision for Inference. My research can thus be summarized as “4R for 4R”:

Reverse-engineering 4 continual Reasoning processes underlying Bayesian principles to create Reliable, Resilient, and Responsive Robots that grow in the wild.

Inference: Many Eyes, One Vision

In the wild, robots must first construct a meaningful understanding of the world from noisy, asynchronous, and often heterogeneous data. Consider a fleet of autonomous underwater vehicles (AUVs) exploring a vast seabed. One vehicle’s sonar detects a promising geological feature, another measures a faint chemical anomaly in the water, and a third senses a subtle temperature gradient. Every piece of information counts, but how can these pieces be combined consistently into a single shared understanding, especially in the wild where perfect communication isn’t guaranteed?

My first line of work developed an algorithm for robot teams to effectively combine their different, partial, and noisy observations into a single shared understanding, even in the presence of unreliable communication.

- A Distributed Bayesian Data Fusion Algorithm with Uniform Consistency

Yingke Li, Enlu Zhou, and Fumin Zhang

IEEE Transactions on Automatic Control (TAC), vol. 69, no. 9, pp. 6176-6182, Sept. 2024

- Cognition Difference-based Dynamic Trust Network for Distributed Bayesian Data Fusion

Yingke Li, Ziqiao Zhang, Junkai Wang, Huibo Zhang, Enlu Zhou, and Fumin Zhang

International Conference on Intelligent Robots and Systems (IROS), pp. 10933-10938, Detroit, MI, USA, 2023

- Trust-Preserved Human-Robot Shared Autonomy enabled by Bayesian Relational Event Modeling

Yingke Li and Fumin Zhang

IEEE Robotics and Automation Letters (RA-L), vol. 9, no. 11, pp. 10716-10723, Nov. 2024

- Mixed Opinion Dynamics on the Unit Sphere for Multi-Agent Systems in Social Networks

Ziqiao Zhang, Yingke Li, Said Al-Abri, and Fumin Zhang

American Control Conference (ACC), pp. 4824-4829, Denver, CO, USA, 2025
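
To make the idea of fusing partial, noisy observations concrete, here is a minimal sketch of textbook Bayesian fusion for a single scalar quantity. It is not the distributed algorithm from the papers above: it assumes independent Gaussian estimates and perfectly reliable communication, and the names `GaussianBelief` and `fuse` are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class GaussianBelief:
    """One robot's estimate of a scalar quantity (illustrative type)."""
    mean: float
    variance: float


def fuse(beliefs):
    """Fuse independent Gaussian estimates by precision weighting.

    Each estimate contributes in proportion to 1 / variance, so a
    confident robot counts for more than an uncertain one.
    """
    precision = sum(1.0 / b.variance for b in beliefs)
    mean = sum(b.mean / b.variance for b in beliefs) / precision
    return GaussianBelief(mean=mean, variance=1.0 / precision)


# Three hypothetical AUV estimates of the same quantity (made-up numbers).
team = [
    GaussianBelief(mean=10.0, variance=4.0),
    GaussianBelief(mean=12.0, variance=1.0),
    GaussianBelief(mean=11.0, variance=2.0),
]
shared = fuse(team)
print(shared)
```

The fused variance is never larger than the smallest individual variance, which is the sense in which many eyes can yield one sharper vision under these simplifying assumptions.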

Inference for Decision: Think just enough, just in time

With a shared understanding of the environment, robots must make timely decisions with limited battery life or processing power. This requires more than just choosing what to do—it demands choosing how much to think before doing. An AUV may make a high-level, energy-cheap decision like “survey that western ridge”, while deferring the costly, fine-grained motion planning until it is absolutely necessary. This saves immense energy and allows it to react to unexpected discoveries, like a sudden plume of superheated water, without costly replanning.

My second line of work created a planning framework that allows robots to make efficient decisions by balancing high-level, long-term goals with immediate, detailed actions, without getting bogged down in unnecessary computation.

- Integrated Task and Motion Planning for Process-aware Source Seeking

Yingke Li, Mengxue Hou, Enlu Zhou, and Fumin Zhang

American Control Conference (ACC), pp. 527-532, San Diego, CA, USA, 2023

- An Interleaved Algorithm for Integration of Robotic Task and Motion Planning

Mengxue Hou, Yingke Li, Fumin Zhang, Shreyas Sundaram, and Shaoshuai Mou

American Control Conference (ACC), pp. 539-544, San Diego, CA, USA, 2023

- Dynamic Event-triggered Integrated Task and Motion Planning for Process-aware Source Seeking

Yingke Li, Mengxue Hou, Enlu Zhou, and Fumin Zhang

Autonomous Robots (AURO), vol. 48, no. 23, 2024
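
One simple way to picture "thinking just enough" is an event trigger: the robot keeps following its current high-level plan and spends energy on replanning only when an observation departs from the prediction by more than a tolerance. The sketch below illustrates that generic idea only. The function names, the fixed threshold, and the temperature readings are assumptions made for this example, not the triggering rule used in the papers above.

```python
def should_replan(predicted, observed, threshold):
    """Trigger replanning only when the surprise exceeds the tolerance."""
    return abs(observed - predicted) > threshold


def replanning_steps(predictions, observations, threshold):
    """Return the time steps at which costly replanning would be invoked."""
    steps = []
    for step, (predicted, observed) in enumerate(zip(predictions, observations)):
        if should_replan(predicted, observed, threshold):
            steps.append(step)
    return steps


# Hypothetical water-temperature readings; the third one is a sudden plume.
predicted_temps = [10.0, 10.0, 10.0, 10.0]
observed_temps = [10.2, 9.9, 14.0, 10.1]
triggered = replanning_steps(predicted_temps, observed_temps, threshold=1.0)
print(triggered)
```

With these made-up numbers, only the plume reading triggers detailed planning, and the other steps reuse the existing plan.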

Decision: Where there is risk, there is caution

Strategic thinking eases the computational burden, but the price of that freedom is model fidelity. Robots must therefore act on imperfect models, often in high-stakes situations. Imagine an AUV that picks up a promising reading near a treacherous underwater canyon. A single bad decision, such as entering the canyon with unknown currents to get a slightly better sensor reading, could leave the vehicle caught in a vortex and permanently lost.

My third line of research developed a control framework that enables robots to act safely and cautiously when their understanding of the world is incomplete or uncertain, preventing them from taking overconfident, risky actions.

- Bayesian Learning Model Predictive Control for Process-aware Source Seeking

Yingke Li, Tianyi Liu, Enlu Zhou, and Fumin Zhang

IEEE Control Systems Letters (L-CSS), vol. 6, pp. 692-697, 2022

- Risk-Aware Model Predictive Control Enabled by Bayesian Learning

Yingke Li, Yifan Lin, Enlu Zhou, and Fumin Zhang

American Control Conference (ACC), pp. 108-113, Atlanta, GA, USA, 2022

- Bayesian Risk-averse Model Predictive Control with Consistency and Stability Guarantees

Yingke Li, Yifan Lin, Enlu Zhou, and Fumin Zhang

IEEE Transactions on Automatic Control (TAC), under review
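
The difference between an overconfident choice and a cautious one can be illustrated with a standard risk measure, conditional value-at-risk (CVaR), evaluated over cost samples drawn from an uncertain model. This is a minimal sketch under stated assumptions, not the control framework from the papers above: the action names, cost samples, and risk level are invented for the example, and a real controller would optimize over trajectories rather than two discrete options.

```python
import math


def cvar(costs, alpha):
    """Mean of the worst (1 - alpha) fraction of sampled costs."""
    ordered = sorted(costs, reverse=True)
    tail_size = max(1, math.ceil((1.0 - alpha) * len(ordered)))
    return sum(ordered[:tail_size]) / tail_size


def mean(costs):
    return sum(costs) / len(costs)


# Hypothetical cost samples for each action under model uncertainty.
sampled_costs = {
    "enter_canyon": [1.0, 1.0, 1.0, 10.0],   # usually cheap, rarely disastrous
    "stay_on_ridge": [4.0, 4.0, 5.0, 5.0],   # consistently moderate
}

risk_neutral = min(sampled_costs, key=lambda a: mean(sampled_costs[a]))
risk_averse = min(sampled_costs, key=lambda a: cvar(sampled_costs[a], alpha=0.5))
print(risk_neutral, risk_averse)
```

Minimizing the average cost favors the canyon, while minimizing the tail cost favors the ridge, which mirrors the cautious behavior described above.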

Decision for Inference: Stay pragmatic, stay curious

Excessive caution can paralyze learning, especially when precise models can only emerge through interaction with the world. In the wild, curiosity becomes essential—not as a luxury, but as a mechanism for learning what cannot be pre-specified. An AUV hiding in a safe, low-current zone will never find a hydrothermal vent. Knowledge about this wild world is not passive; it must be earned through action.

My last line of work completes the Bayesian reasoning loop by creating a paradigm where a robot’s curiosity (i.e., its drive to gather information) is seamlessly integrated with its need to accomplish goals, allowing it to learn efficiently without compromising its core objectives.

- Pragmatic Curiosity: Unifying Bayesian Optimization and Experimental Design via Active Inference

Yingke Li, Anjali Parashar, Enlu Zhou, and Chuchu Fan

Under review

- Curiosity is Knowledge: Self-consistency and No-regret enabled by Active Inference

Yingke Li and Chuchu Fan

In preparation

- Foresight with Curiosity: Active Inference for Non-Myopic Bayesian Optimization and Experimental Design

Yingke Li, Yuchao Li, Fei Chen, Dimitri Bertsekas, and Chuchu Fan

In preparation

- SEED-SET: Scalable Evolving Experimental Design for System-level Ethical Testing

Anjali Parashar, Yingke Li, Eric Yang Yu, Fei Chen, James Neidhoefer, Devesh Upadhyay, and Chuchu Fan

Under review

- Cost-aware Discovery of Contextual Failures using Bayesian Active Learning

Anjali Parashar, Joseph Zhang, Yingke Li, and Chuchu Fan

Conference on Robot Learning (CoRL), vol. 305, pp. 2239-2267, Seoul, Korea, 2025
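
A rough way to see how curiosity and goal pursuit can share one objective is to score each candidate action by its expected reward plus a weighted information gain. The sketch below uses the standard mutual information of a Gaussian belief observed through Gaussian noise. It is an illustrative stand-in, not the formulation developed in the papers above, and the candidate names, numbers, and `curiosity_weight` parameter are assumptions.

```python
import math


def information_gain(prior_variance, noise_variance):
    """Expected information (in nats) from one noisy Gaussian observation."""
    return 0.5 * math.log(1.0 + prior_variance / noise_variance)


def score(expected_reward, prior_variance, noise_variance, curiosity_weight):
    """Pragmatic value plus weighted epistemic value."""
    gain = information_gain(prior_variance, noise_variance)
    return expected_reward + curiosity_weight * gain


def choose(candidates, noise_variance, curiosity_weight):
    """Pick the candidate (name -> (expected reward, variance)) with best score."""
    return max(
        candidates,
        key=lambda name: score(*candidates[name], noise_variance, curiosity_weight),
    )


# Hypothetical survey sites: (expected reward, prior variance of that reward).
sites = {
    "known_ridge": (1.0, 0.01),
    "unexplored_vent": (0.8, 4.0),
}
purely_pragmatic = choose(sites, noise_variance=1.0, curiosity_weight=0.0)
curious = choose(sites, noise_variance=1.0, curiosity_weight=0.5)
print(purely_pragmatic, curious)
```

With no curiosity the robot returns to the well-understood ridge. A modest curiosity weight tips it toward the uncertain site, where an observation is far more informative.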

Broader Impact and Vision

My vision is to build robots that can thrive in the unknown by treating uncertainty not as a barrier, but as the driver of intelligence. My work will enable long-term autonomy in domains where human access is limited, such as space exploration, disaster response, and deep-sea monitoring. By enabling robots to reason adaptively across scales, I aim to move beyond brittle, task-specific systems toward autonomous collectives capable of surviving and succeeding in unpredictable environments for months or years at a time. In doing so, my research contributes not only to the future of robotics, but also to a deeper scientific understanding of intelligence as a continuous, embodied, and Bayesian process.